What to Measure

To make our chatbot great, we need to measure three things so that each can be improved:

- User success – do the users complete the tasks they start?

- User satisfaction – does the chatbot delight your users or do they find it frustrating?

- Chat errors – is your chatbot complete? Does it have logic or entity errors that prevent success and satisfaction?

A Sample Chatbot: CatTime

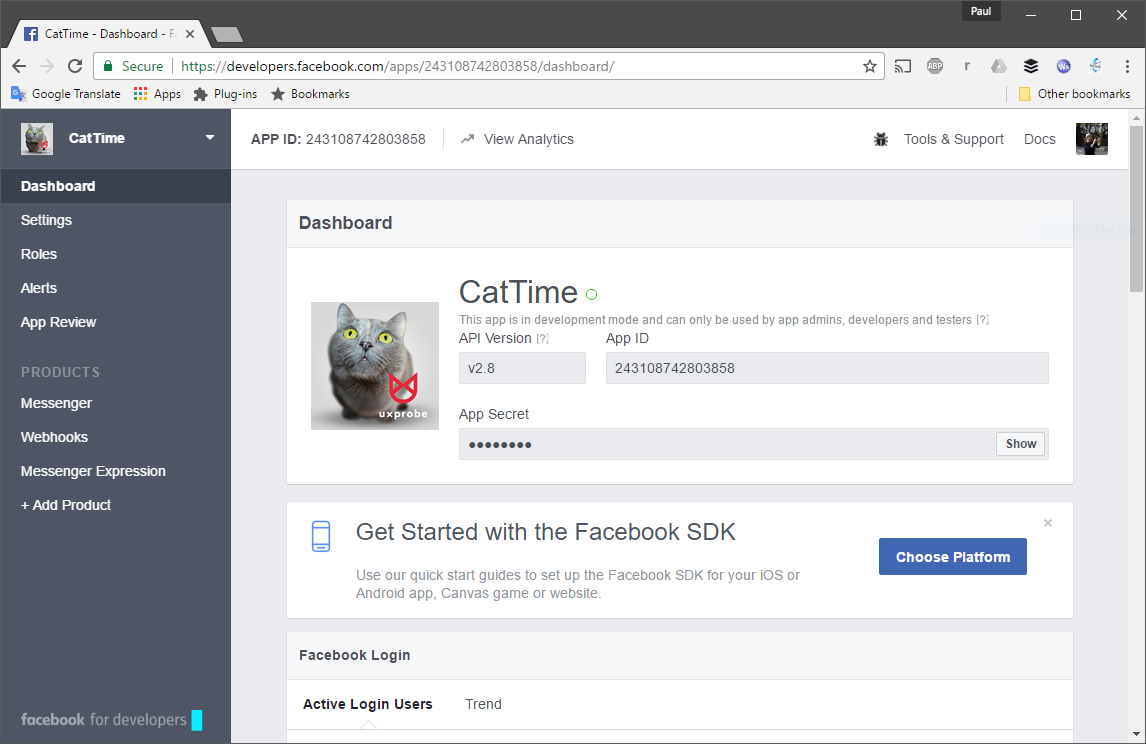

CatTime is a simple Facebook chatbot. I created it to demonstrate the three important types of measurement we need to make our chatbot great.

I got started on the Facebook developer console.

Then I created my chatbot webhook. I am using Python, but there are lots of examples in the Facebook developer tools for PHP, Java, NodeJS, etc.
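If you have not built a Messenger webhook before, here is a minimal, framework-free sketch of its two jobs: answering Facebook's one-time verification request and pulling the individual message events out of each POST body. The function names and the `VERIFY_TOKEN` value are hypothetical and are not CatTime's actual code. The `hub.*` query parameters and the `entry`/`messaging` layout are written from my understanding of the Messenger Platform webhook format, so check them against the current Facebook documentation.

```python
# Hypothetical token; it has to match the one entered in the Facebook developer console.
VERIFY_TOKEN = "my-verify-token"


def verify_webhook(query_params):
    """Return the challenge string if the verification request is valid, else None."""
    if (query_params.get("hub.mode") == "subscribe"
            and query_params.get("hub.verify_token") == VERIFY_TOKEN):
        return query_params.get("hub.challenge")
    return None


def iter_message_events(body):
    """Yield (sender_id, event) for every message event in a decoded webhook POST body."""
    if body.get("object") != "page":
        return
    for entry in body.get("entry", []):
        for event in entry.get("messaging", []):
            if "message" in event:
                yield event["sender"]["id"], event
```

Whatever web framework you use only has to pass the GET query parameters to the first function and the decoded JSON body of each POST to the second.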

Logging Sessions, Chat Text and Task Starts

Our chatbot is very simple and doesn’t use any AI or NLP (Natural Language Processing) like yours does, but that doesn’t change how we measure the chatbot.

Facebook sends messages from Facebook users to our chatbot webhook. To track users properly we need to save a bit of state for each user session. I am using memcache, but you will use whatever session memory is available on your platform. When we receive the first message of a session, we save this info and log the start of the session with UXprobe:

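    # sessionID here is the Facebook sender ID; the cache key is prefixed with the bot name.
    session = memcache.get("cattime" + sessionID)
    if session is None:
        # First message of a new session: build the state and tell UXprobe.
        logging.info("cattime sessionID not found in memcache: " + sessionID)
        session = {'sessionID': str(uuid.uuid1()), 'taskID': None, 'sequenceID': None,
                   'sessionInfo': {'product': 'CatTime', 'version': '1.0',
                                   'startTime': uxpcurtime(), 'userID': sessionID}}
        uxpSessionStart(session)
        # Keep the session state for 600 seconds.
        memcache.add("cattime" + sessionID, session, 600)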

We save a bit of useful info that will be used for all further UXprobe logging.
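If you don't have memcache, the same get-or-create pattern works with any key-value store that supports expiry. The sketch below uses a tiny in-memory stand-in. `TTLStore`, `get_or_start_session` and the `on_start` callback are hypothetical names of my own, and the session dict is a trimmed version of the one above.

```python
import time
import uuid


class TTLStore:
    """Tiny in-memory stand-in for memcache: values expire `ttl` seconds after being set."""

    def __init__(self):
        self._items = {}

    def get(self, key, now=None):
        now = time.time() if now is None else now
        value, expires_at = self._items.get(key, (None, 0.0))
        return value if now < expires_at else None

    def set(self, key, value, ttl, now=None):
        now = time.time() if now is None else now
        self._items[key] = (value, now + ttl)


def get_or_start_session(store, sender_id, on_start, ttl=600, now=None):
    """Return the sender's session, creating it and calling on_start(session) if needed."""
    key = "cattime" + sender_id
    session = store.get(key, now)
    if session is None:
        session = {"sessionID": str(uuid.uuid1()), "taskID": None,
                   "sequenceID": None, "userID": sender_id}
        on_start(session)  # this is where a call like uxpSessionStart would go
        store.set(key, session, ttl, now)
    return session
```

With a real cache, the stored value is a copy, so remember to write the session back whenever you change it (for example after a task starts or ends).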

Next we process the message that we received (Facebook calls them message events):

    message = event['message']
    logging.info("cattime received message " + str(message))
    messageID = message.get('mid', None)
    messageText = message.get('text', None)

We log the chat message with UXprobe:

    uxpChat(session, messageText, 'user')

Then we respond. Normally this is where you would make a call out to your NLP but I am going to do something very simple:

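    # messageText is None when the message has no text (e.g. an attachment), so check it first.
    if messageText and 'cat' in messageText.lower():
        gifurl = gifyImage(messageText)
        messageData['message'] = {
            "attachment": {"type": "image", "payload": {"url": gifurl}}
        }
        # Start the task only once per session.
        if session['taskID'] is None:
            session = uxpTaskStart(session, "Enjoy Cat Gifs")
        # Log the bot's side of the conversation.
        uxpChat(session, gifurl, 'ai')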

If the string ‘cat’ is in the message text, I send the message text as a query to http://giphy.com, which kindly returns the URLs of 25 cat gifs. I select one at random and send it back to the user as an image attachment. So a ‘fat cat’ message gets you a gif of a fat cat, ‘grumpy cat’ gets you a gif of Grumpy Cat, etc. It’s very soothing.
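The body of `gifyImage` isn't shown here, but the idea can be sketched roughly as follows. This is not the CatTime implementation: the two function names are hypothetical, and the endpoint, the `api_key`/`q`/`limit` parameters and the `data[].images.original.url` response layout reflect my understanding of the public Giphy search API, so verify them against Giphy's documentation. The network call itself is left out so the sketch runs offline.

```python
import random
from urllib.parse import urlencode

# Assumed Giphy search endpoint.
GIPHY_SEARCH = "https://api.giphy.com/v1/gifs/search"


def giphy_search_url(query, api_key, limit=25):
    """Build the search URL for a query; api_key is your own Giphy key."""
    return GIPHY_SEARCH + "?" + urlencode({"api_key": api_key, "q": query, "limit": limit})


def pick_gif_url(response, rng=random):
    """Pick one gif URL at random from a decoded search response, or None if it is empty."""
    urls = [item["images"]["original"]["url"] for item in response.get("data", [])]
    return rng.choice(urls) if urls else None
```

You would fetch the URL with your HTTP client of choice, decode the JSON, and pass the result to `pick_gif_url`. Returning `None` for an empty result lets the bot fall back to a text reply instead of crashing.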

We logged two events in UXprobe from this message event. The first thing we do is start a task – ‘Enjoy Cat Gifs’. It’s important to measure tasks in your chatbot – it’s the way you can tell if it’s useful and if people get things done with it.

Second, we also logged this chat message in UXprobe and we marked it as from the ‘ai’. This will let us see both sides of the chat later on if we need to.

Logging Surveys and Task Completions

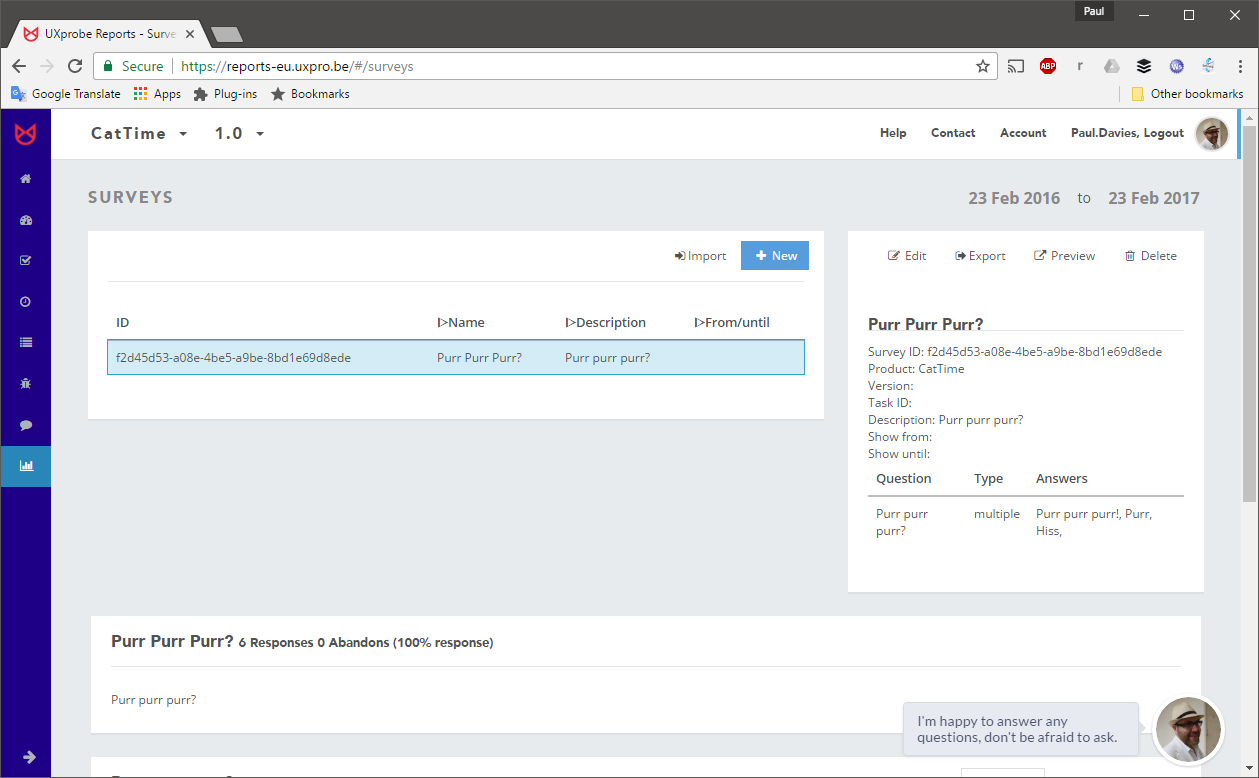

OK – good. We are logging sessions, chats and tasks. That gives us a lot. Let’s also try measuring user satisfaction and the completion of the tasks. We create a simple survey in the UXprobe dashboard called ‘Purr purr purr?’. This survey has one multiple choice question. We note the survey ID.

If we see the word ‘purr’ in the chat message then we will show the satisfaction survey. To display buttons to the user in Facebook Messenger we construct a template message with buttons:

    { "template_type": "button",
      "text": "Purr purr purr?",
      "buttons": [
        { "type": "postback",
          "title": "Purr Purr Purr!",
          "payload": '{ "surveyID":"f2d45d53-a08e-4be5-a9be-8bd1e69d8ede", "answer":["0"] }'
        },
        { "type": "postback",
          "title": "Purr",
          "payload": '{ "surveyID":"f2d45d53-a08e-4be5-a9be-8bd1e69d8ede", "answer":["1"] }'
        },
        { "type": "postback",
          "title": "Hiss",
          "payload": '{ "surveyID":"f2d45d53-a08e-4be5-a9be-8bd1e69d8ede", "answer":["2"] }'
        }
      ] }
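Writing the JSON payload strings by hand gets error-prone once you have more than one survey. A small helper can generate the same structure; this is a sketch, and `build_survey_template` is a hypothetical name, not part of UXprobe or the Messenger API.

```python
import json


def build_survey_template(survey_id, question, titles):
    """Build a button template whose postback payloads carry the survey ID and answer index."""
    buttons = []
    for index, title in enumerate(titles):
        # Same payload shape as the hand-written template: the answer is a list with one index.
        payload = json.dumps({"surveyID": survey_id, "answer": [str(index)]})
        buttons.append({"type": "postback", "title": title, "payload": payload})
    return {"template_type": "button", "text": question, "buttons": buttons}
```

For example, `build_survey_template(survey_id, "Purr purr purr?", ["Purr Purr Purr!", "Purr", "Hiss"])` produces the three buttons shown above, with `json.dumps` taking care of the quoting.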

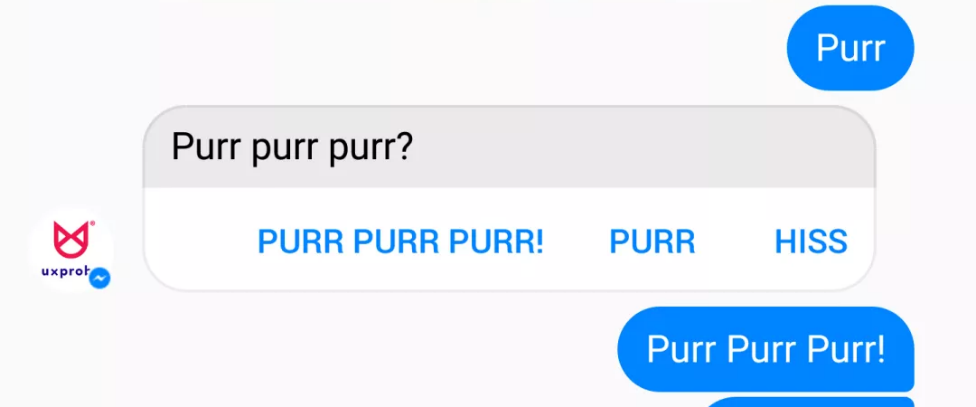

This is how the survey looks for the user:

When the user clicks on the button, Facebook sends us back our payload:

    "payload":'{"surveyID":"f2d45d53-a08e-4be5-a9be-8bd1e69d8ede", "answer":["1"]}'

In our postback handler we unpack the payload and log the survey response:

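    # jpl: the payload unpacked from the postback (assumed here to be the decoded JSON dict).
    if 'surveyID' in jpl and 'answer' in jpl:
        uxpSurvey(session, jpl['surveyID'], jpl['answer'])
        # Answering the survey also completes the open task.
        if session['taskID'] is not None:
            session = uxpTaskEnd(session, session['taskID'], 'success')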

We also end our task – the user has enjoyed the cat gifs. Ending a task is purely dependent on your chatbot logic and what makes sense. You can have as many different tasks as you need.
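The unpacking step is worth doing defensively, because any postback button (not just survey buttons) ends up in the same handler. Here is a minimal sketch, assuming the postback event carries the string under `event['postback']['payload']` as in the Messenger format. `parse_survey_postback` is a hypothetical helper name.

```python
import json


def parse_survey_postback(event):
    """Return (survey_id, answer) from a postback event, or None if it is not a survey reply."""
    raw = event.get("postback", {}).get("payload")
    if not raw:
        return None
    try:
        jpl = json.loads(raw)
    except (ValueError, TypeError):  # payload was not valid JSON text
        return None
    if isinstance(jpl, dict) and "surveyID" in jpl and "answer" in jpl:
        return jpl["surveyID"], jpl["answer"]
    return None
```

A `None` result simply means the postback belongs to some other button, so the survey and task logging can be skipped for it.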

Now we have logged a survey and a task completion. From that we can understand user satisfaction – did the user enjoy the cat gifs? And we can calculate how successful the user was – did they complete the task? How long did it take? How many messages did they send before completion? This is all available in the UXprobe dashboard.
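To make those three questions concrete, here is roughly the arithmetic involved, sketched over a made-up event list. The event format (`type`, `time`, `source`, `status`) is purely hypothetical and is not UXprobe's data model; the dashboard does this work for you.

```python
def task_metrics(events):
    """Summarise one task from a time-ordered list of event dicts (hypothetical format)."""
    start = next((e for e in events if e["type"] == "task_start"), None)
    end = next((e for e in events if e["type"] == "task_end"), None)
    if start is None:
        return None  # no task was started in this session
    completed = end is not None and end.get("status") == "success"
    stop_time = end["time"] if end else events[-1]["time"]
    # Count only the user's messages sent while the task was open.
    user_messages = sum(
        1 for e in events
        if e["type"] == "chat" and e.get("source") == "user"
        and start["time"] <= e["time"] <= stop_time
    )
    return {
        "completed": completed,
        "duration_seconds": (end["time"] - start["time"]) if end else None,
        "user_messages": user_messages,
    }
```

A task that was started but never ended shows up as `completed: False` with no duration, which is exactly the case you want to find and investigate.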

Logging Errors to Improve Quality

The last thing we will log is an error. When you use an NLP system to understand user messages, it will generate errors whenever it cannot understand the input. A typical issue is an unknown entity. Users come up with lots of synonyms for words, especially in chat, so to make the chatbot its best it helps to have a killer entity dictionary. Only by tracking user chats can you catch all the edge cases your users will come up with.

So – for the demo – let’s simulate an unknown entity error. When we see the text ‘woof’ in the user message, we log an error:

    # Guard against messages without text before lowercasing.
    if messageText and 'woof' in messageText.lower():
        uxpError(sender, 'bad entity', messageText, 'entity woof not allowed')

That’s simple but it will be super helpful. Let your chatbot run for a week and you will have lots of missing input for your entity database.
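In a bot with a real entity dictionary, the same idea might look like the sketch below: look each word up in the dictionary and count the ones you don't recognise, so the most frequent misses can be added as synonyms. The `ENTITIES` table and `resolve_entities` are hypothetical illustrations, not part of CatTime or of any particular NLP system, and a real system would tokenise far more carefully.

```python
from collections import Counter

# Hypothetical entity dictionary: canonical entity -> known synonyms.
ENTITIES = {"cat": {"cat", "kitty", "kitten", "moggy"}}


def resolve_entities(text, entities=ENTITIES, unknown=None):
    """Return the canonical entities found in text; tally unrecognised words in `unknown`."""
    found = []
    for word in text.lower().split():
        word = word.strip(".,!?")
        canonical = next((name for name, syns in entities.items() if word in syns), None)
        if canonical is not None:
            found.append(canonical)
        elif unknown is not None and word:
            unknown[word] += 1  # this is where an error would be logged
    return found
```

Pass in a `Counter()` as `unknown`, and after a week of traffic `unknown.most_common(20)` gives you a ranked to-do list for the entity database.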

Wrapping Up

And that’s it – very easy and very helpful. We can now measure:

- User success – important for your stakeholders to gauge the usefulness of the chatbot

- User satisfaction – critical to understand if the chatbot is delighting or frustrating your customers

- Chatbot quality – so it can be improved

So we have three important metrics, but we also have them in relation to each other – so we can see how quality impacts user success or how user success impacts user satisfaction. That gives us actionable data to make the chatbot the best it can be.

Do you also want to improve the quality of your chatbot?

ABOUT THE AUTHOR

Paul Davies

Co-founder